When the site became slow, I asked ChatGPT to find the reason. Its reply? ChatGPT was the cause!

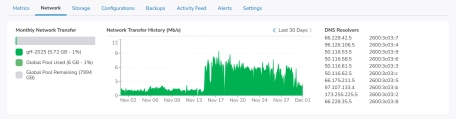

During late November 2025 this site was severely slowed down by a sudden increase in traffic. The number of incoming requests probably rose by a factor of 10 overnight. For a couple of weeks traffic stayed at that level, overloading the server and causing all kinds of trouble.

I had constantly high CPU loads, the server filling up completely with cache files, very slow response times, a database that kept clogging up and hanging, and other annoying effects.

I asked Linode, the company that provides the server, if they could see anything. They saw a rise in traffic, but no actual attack such as a DDoS. Beyond that they couldn't help: since the traffic was within what can normally be expected, the problem was mine to solve.

So I turned to ChatGPT and asked how I would diagnose the problem.

The AI led me through a series of fairly complex Linux commands. It analyzed the output of each one, moved me on to new ones, and could finally draw a conclusion.

And the conclusion?

Bot traffic, mainly from ChatGPT itself!


The site was being visited by the bots that gather information for the LLMs (Large Language Models) behind various AIs: OpenAI's (ChatGPT), Anthropic's (Claude), Meta's (Facebook) and more.

These bots were fetching pages on GFF at a rate of dozens per second, which is far too fast. Well-behaved bots crawl slowly and don't weigh sites down!

My way out was to block them before they could reach any content, and that proved quite effective. I had already blocked them in the site's robots.txt file, but that is just a request to stay out, not a technical block.
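robots.txt works on the honour system: a compliant crawler downloads the file and checks every URL against it before fetching, but that check runs on the crawler's side, and the server enforces nothing. Here is a minimal sketch of the client-side check, using Python's standard `urllib.robotparser`. The robots.txt content is a hypothetical example, not the site's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: asks GPTBot to stay out, allows everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def polite_crawler_may_fetch(user_agent: str, url: str) -> bool:
    """Return True if a crawler that honours robots.txt would fetch url.

    The decision is made entirely by the crawler; the web server
    plays no part in it.
    """
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/some/page"
    print(polite_crawler_may_fetch("GPTBot", page))       # False
    print(polite_crawler_may_fetch("Mozilla/5.0", page))  # True
```

A crawler that skips this check, or ignores the answer, still gets the page. That is why a server-side block was needed.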

Now they are blocked from entering, and the major AIs are no longer training on GFF content.
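The server-side block relies on the User-Agent header that each bot sends with its requests. The site uses Apache `mod_rewrite` rules in `.htaccess` for this, and those rules aren't reproduced in the post. The Python function below only sketches the same decision: a case-insensitive match against a list of crawler names, answered with 403 Forbidden before any page is generated. The pattern is an assumption built from the bot names in the summary further down.

```python
import re

# Illustrative pattern built from the crawler names listed in the summary;
# it is not the site's actual .htaccess rule.
BLOCKED_BOTS = re.compile(
    r"GPTBot|Claude-SearchBot|meta-externalagent|Baiduspider|Bytespider"
    r"|SemrushBot|DataForSeoBot|BLEXBot",
    re.IGNORECASE,
)


def status_for(user_agent: str) -> int:
    """Return 403 Forbidden for a listed crawler, else 200 (serve normally).

    Like a User-Agent rewrite condition, this only looks at the header the
    client chooses to send, so a bot that disguises itself is not caught.
    """
    if BLOCKED_BOTS.search(user_agent or ""):
        return 403
    return 200


if __name__ == "__main__":
    # Example User-Agent in the style GPTBot uses (details may differ).
    gptbot = "Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"
    print(status_for(gptbot))                             # 403
    print(status_for("Mozilla/5.0 (X11; Linux x86_64)"))  # 200
```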

AI is indeed a double-edged sword, and this incident shows the problem clearly. On the one hand, AI can solve problems that some of us humans might have trouble handling; I could not have done this on my own without a Linux expert by my side. On the other hand, it does so by drawing on all the knowledge that is already out there, and that obviously sometimes means over-utilizing it.

But ChatGPT solved my problems by shutting itself out. The site is now as fast as ever, probably even faster, and I'm happy.

The way there

For those of you (few, I hope) who are facing and having to handle similar problems, I asked the AI to summarize what it had me do. This was it:

- Identified abnormal connection behavior
  - Used `ss -tnp` to inspect current TCP connections.
  - Observed many CLOSE-WAIT sockets with large queues, indicating connections not being closed properly and workers being held too long.

- Analyzed traffic volume and pattern
  - Parsed Apache access logs to compute requests per hour.
  - Found spikes of around 100,000 requests per hour, confirming a significant traffic surge.

- Discovered bot-dominated traffic as the main driver
  - Extracted the top User-Agent strings from the Apache logs.
  - Found very high counts from crawlers such as:
    - GPTBot (OpenAI): ~866k requests
    - meta-externalagent (Meta crawler): ~103k requests
    - Baiduspider family: ~77k requests
    - Claude-SearchBot (Anthropic), Applebot, DataForSeo, BLEXBot, etc.
  - Concluded that the spike was primarily caused by AI/search/SEO bots, not human visitors.

- Implemented bot blocking
  - Updated `robots.txt` to explicitly disallow known AI, SEO, and aggressive international crawlers.
  - Added Apache `.htaccess` rules using `mod_rewrite` to return 403 Forbidden to:
    - GPTBot, Claude-SearchBot, meta-externalagent
    - Baiduspider, Bytespider, SemrushBot, DataForSeoBot, BLEXBot, and others.
  - Observed an immediate reduction in abusive bot traffic.

- Diagnosed Apache worker saturation
  - Checked `/var/log/apache2/error.log` for MPM errors.
  - Found repeated messages:
    `server reached MaxRequestWorkers setting, consider raising the MaxRequestWorkers setting`
  - Confirmed that Apache’s mpm_prefork worker pool was hitting its concurrency limit and causing slow responses.

- Measured Apache process memory usage and considered available RAM
  - Used `ps -ylC apache2 --sort:rss` to inspect Apache child memory usage.
  - Found each worker using roughly 16–18 MB RSS.
  - Verified with `free -m` that the server has 16 GB RAM with plenty of free and cached memory.

- Tuned Apache prefork MPM settings
  - Edited `mpm_prefork.conf` to better match available resources.
  - Adjusted key values to:
    - `MaxRequestWorkers 250` (up from 150, leveraging 16 GB RAM safely)
    - `MaxConnectionsPerChild 2000` to periodically recycle workers and avoid memory bloat
  - Aim: allow more concurrent requests without hitting the worker ceiling or causing swap thrashing.

- Optimized connection and timeout behavior
  - Added/tuned global connection settings in `apache2.conf`:
    - `KeepAlive On`
    - `MaxKeepAliveRequests 100`
    - `KeepAliveTimeout 2` (reduced from 5 to free workers faster)
    - `Timeout 60` (reduced from the much higher default)
  - Enabled and configured `mod_reqtimeout` to protect against slowloris-style attacks and very slow clients:
    `RequestReadTimeout header=10-20,MinRate=500 body=10,MinRate=500`
  - Goal: ensure workers are not held for excessive time by idle or misbehaving clients.

- Verified overall resource health and stability
  - Confirmed that memory usage remained healthy and stable, with:
    - High `available` memory (~13+ GB)
    - Minimal and stable swap usage (~253 MB)
  - Concluded that, with increased worker limits and stricter timeouts, Apache can now handle legitimate traffic surges more gracefully.
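A short script can reproduce the log analysis in the second and third steps: requests per hour, then the top User-Agent strings. This is a minimal sketch, not the exact commands from the session. It assumes Apache's standard "combined" log format, which records the User-Agent as the last quoted field.

```python
import re
import sys
from collections import Counter
from datetime import datetime

# Apache "combined" format (assumed):
# host ident user [time] "request" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def summarize(lines):
    """Count requests per hour and per User-Agent string."""
    per_hour = Counter()
    per_agent = Counter()
    for line in lines:
        match = COMBINED.match(line)
        if match is None:
            continue  # skip lines that are not in combined format
        # Timestamps look like 25/Nov/2025:10:15:32 +0100
        stamp = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        per_hour[stamp.strftime("%Y-%m-%d %H:00")] += 1
        per_agent[match["agent"]] += 1
    return per_hour, per_agent


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        hours, agents = summarize(log)
    print("Requests per hour:")
    for hour, count in sorted(hours.items()):
        print(f"  {hour}  {count:>8}  ({count / 3600:.1f} req/s)")
    print("Top User-Agents:")
    for agent, count in agents.most_common(10):
        print(f"  {count:>8}  {agent}")
```

It would be run as `python3 summarize_log.py /var/log/apache2/access.log` (the script name is hypothetical). At the roughly 100,000 requests per hour found in the logs, the per-second figure is 100,000 / 3,600 ≈ 28 requests per second. That fits the "dozens per second" the bots were fetching.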

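Simple arithmetic can sanity-check the choice of 250 prefork workers: divide the RAM left over for Apache by the memory each worker uses. This is a minimal sketch. The 16 GB and 18 MB figures come from the steps above. The 4 GB set aside for the operating system, database and caches is an assumed figure, not a measurement from this server. Summing RSS also overstates real usage, since prefork children share pages with the parent process, so the estimate errs on the safe side.

```python
def prefork_budget(total_ram_mb, reserved_mb, worker_rss_mb, max_request_workers):
    """Return (workers that fit in RAM, MB needed by the configured workers).

    Treats each worker's RSS as fully private memory, which overestimates
    usage for prefork children that share pages with their parent.
    """
    fits = int((total_ram_mb - reserved_mb) // worker_rss_mb)
    needed = max_request_workers * worker_rss_mb
    return fits, needed


if __name__ == "__main__":
    # 16 GB RAM and ~18 MB per worker are from the post; 4 GB reserved
    # for everything else is an assumption.
    fits, needed = prefork_budget(16 * 1024, 4 * 1024, 18, 250)
    print(f"{fits} workers would fit; 250 workers need about {needed} MB")
    # prints "682 workers would fit; 250 workers need about 4500 MB"
```

With these numbers, about 680 workers would fit. That leaves 250 workers, at roughly 4.5 GB, with a wide margin, which is consistent with the large amount of available memory seen in the final check.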