There are a bunch of modules to help import content into Drupal. In my experience they are inadequate and don’t do what I need. I prefer DIY.

Nerd alert

This is NOT about fly fishing or fly tying, but about site development and nerdy stuff. I recently had to merge the content of a WordPress site into GFF. Streamers365.com was a WordPress site with 800+ posts, and its content was to be intertwined with the existing GFF content. You can see the result here.

The Mighty Migrate Modules

There are a whole bunch of existing Drupal modules that promise a smooth and almost effortless import of content into Drupal, ranging from the massive do-it-all Migrate module to the more focused WordPress Migrate module. Both belong to the Migrate ecosystem, which also has facilities to fetch content from older Drupal versions, Gallery2, phpBB, Typo3 and more.

I have dipped my toes (OK, actually submerged my whole body) into the ice cold waters of Migrate on several occasions, but unfortunately found it wanting every time. It’s an impressive module with a gazillion facilities, but it hasn’t been able to do what I needed on any of my import missions.

Every single time I have tried it, I have wound up coding my own routines.

Directly to DIY

On this particular occasion, I didn’t even fire up Migrate, but ventured directly into a custom module.

Now, WordPress isn’t rocket science… with all due respect. The CMS runs a colossal number of sites and does so very well, but the structure behind – the architecture – is fairly simple and easy to understand.

WordPress works with posts, and the vast majority of sites have just that: a long list of posts, recorded in the database and presented in reverse chronological order in a simple blog form. There are also pages, typically “About us”, “Contact” and such. There are usually no fields, no complex relations, no managed files or other complex structures. There are tags, but again they are simple in structure, and there are a few other odds and ends you might need to transfer, like comments.

But it’s all fairly easy to decipher, and WP is well documented.

Streamers365.com is/was such a fairly straightforward site with a little more than 800 posts and pages and an equal number of comments.

wp_posts

My primary source for the import was of course the database table wp_posts, which is – you guessed it – the posts. In this you will find posts, pages, revisions and basically all content.

It also contains title, publishing status, dates and much more.

I took the original database from the WP site and imported it into a separate database on my local MySQL server. I then added it to my Drupal 7 settings, using the option to define several databases in settings.php:

```php
$databases = array(
  // The Drupal (GFF) database.
  'default' => array(
    'default' => array(
      'database' => 'gff',
      'username' => 'gff',
      'password' => 'thePassword',
      'host' => 'localhost',
      'port' => '',
      'driver' => 'mysql',
      'prefix' => '',
    ),
  ),
  // The imported WordPress (Streamers365) database.
  'streamers' => array(
    'default' => array(
      'database' => 'streamers',
      'username' => 'gff',
      'password' => 'thePassword',
      'host' => 'localhost',
      'port' => '',
      'driver' => 'mysql',
      'prefix' => '',
    ),
  ),
);
```

This construction allowed me to shift between databases, and read from one while writing to the other.

So reading all the WP posts was done like this:

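```php
// Switch to the WordPress database.
db_set_active('streamers');

$query = db_select('wp_posts', 'p')
  ->fields('p');

// Either pages or posts...
$or = db_or()
  ->condition('post_type', 'page')
  ->condition('post_type', 'post');

// ...and only the published ones.
$query
  ->condition($or)
  ->condition('post_status', 'publish');

$post_result = $query->execute();

// Post objects keyed by their WordPress ID.
$posts = $post_result->fetchAllAssoc('ID');

// Switch back to the default (Drupal) database.
db_set_active();
```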

This shifts to the streamers database and reads all published content from the wp_posts table where type is page or post.

Revisions

Unfortunately, that’s not quite enough to read a set of WP posts. The thing is that WP also saves revisions of posts in the same table, where they have the post type revision. In order to get the latest version of a post, you have to find its latest revision, which has the post’s ID as its post_parent. Again we have to look in the old database to see if there’s a revision.

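```php
db_set_active('streamers');

// $post is one of the posts fetched above.
$revision_result = db_select('wp_posts', 'p')
  ->fields('p')
  // Attachments and child pages also use post_parent, so only look at revisions.
  ->condition('post_type', 'revision')
  ->condition('post_parent', $post->ID, '=')
  ->condition('post_date', $post->post_date, '>')
  ->orderBy('post_date', 'DESC')
  ->range(0, 1)
  ->execute();

// The newest revision, or FALSE if there is none.
$revision = $revision_result->fetchObject();

db_set_active();
```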

This code will select the latest revision for a post found with the code above.

If we find one, we switch the post in the first set of posts with the new revision, and when that’s been done for all posts, we have a set of the latest posts/versions from the wp_posts table.
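The swap described above could be sketched in plain PHP as follows. This is a sketch under assumptions, not the code from the actual module: it assumes the published posts are keyed by ID (as `fetchAllAssoc('ID')` returns them) and that the candidate revisions have already been fetched into an array, and the function name `apply_latest_revisions()` is hypothetical. As a design choice, it copies only the title and body from the revision, so the post keeps its own ID and publishing status.

```php
<?php
/**
 * Hypothetical helper: give each post the title and body of its newest
 * revision, if that revision is newer than the post itself.
 *
 * @param array $posts     Post objects (wp_posts rows) keyed by ID.
 * @param array $revisions Revision objects (wp_posts rows of type 'revision').
 * @return array The posts, with content taken from their newest revisions.
 */
function apply_latest_revisions(array $posts, array $revisions) {
  // Find the newest revision for each post we are importing.
  $latest = array();
  foreach ($revisions as $rev) {
    $parent = $rev->post_parent;
    if (!isset($posts[$parent])) {
      continue; // Revision of a post that is not being imported.
    }
    if (!isset($latest[$parent]) || $rev->post_date > $latest[$parent]->post_date) {
      $latest[$parent] = $rev;
    }
  }
  // Copy content only, keeping the post's own ID, type and status.
  foreach ($latest as $parent => $rev) {
    if ($rev->post_date > $posts[$parent]->post_date) {
      $posts[$parent]->post_title = $rev->post_title;
      $posts[$parent]->post_content = $rev->post_content;
    }
  }
  return $posts;
}
```

WordPress stores dates as `Y-m-d H:i:s` strings, so a plain string comparison is enough to find the newest revision.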

What remains now is to transfer this to a Drupal node and save it, which ought to be trivial, but in reality was way more complex than I had envisioned.

Shortcodes

First of all, this particular WP site used some markup in the text, so-called shortcodes, which are essentially various tags and codes that need to be interpreted and translated into HTML before the content can be presented.

This is a common way of adding code to text, and is widely used, especially in WordPress. The image system on GFF uses a kind of shortcode, and systems like the phpBB bulletin board system and many others also utilize shortcode-like constructions.

Drupal does not – at least not as a standard – and I don’t want shortcodes or interpretation of them on the site, so the import script would have to translate them to something more Drupal-like.
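As an example of such a translation, here is a minimal sketch that handles one common WordPress shortcode, `[caption]`, and turns it into `<figure>`/`<figcaption>` markup. Which shortcodes the site actually used, and what markup they became, isn't stated, so both the choice of shortcode and the target markup are assumptions; `translate_caption_shortcodes()` is a hypothetical name.

```php
<?php
/**
 * Hypothetical helper: translate WordPress [caption] shortcodes into plain
 * HTML5 <figure>/<figcaption> markup. Other shortcodes are left untouched.
 */
function translate_caption_shortcodes($html) {
  return preg_replace_callback(
    '/\[caption([^\]]*)\](.*?)\[\/caption\]/s',
    function ($m) {
      // Carry the alignment (e.g. alignleft) over as a CSS class.
      $class = '';
      if (preg_match('/align="([^"]*)"/', $m[1], $align)) {
        $class = ' class="' . htmlspecialchars($align[1]) . '"';
      }
      // WordPress puts the image (optionally wrapped in a link) first and
      // the caption text after it.
      $pattern = '/^\s*((?:<a [^>]*>)?\s*<img [^>]*>\s*(?:<\/a>)?)(.*)$/s';
      if (preg_match($pattern, $m[2], $parts)) {
        return '<figure' . $class . '>' . trim($parts[1])
          . '<figcaption>' . trim($parts[2]) . '</figcaption></figure>';
      }
      // Unexpected layout: keep the inner content, drop the shortcode.
      return $m[2];
    },
    $html
  );
}
```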

Links and images

There were also a ton of absolute links, meaning that instead of linking to internal pages using relative links, they included the full domain. In other words “http://streamers365.com/a-fantastic-page” rather than “/?page_id=123”. The latter will work on a site that has moved to another domain, and will even work if the beautified URL has been removed, while the former will lead to the old site and not work. That had to be fixed.
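Rewriting such absolute links can be done with a regular expression over the `href` and `src` attributes. A minimal sketch, assuming the old site answered on `streamers365.com` with or without `www.`, and that root-relative paths are the desired result; `make_links_relative()` is a hypothetical helper. It only strips the domain; mapping old WP paths to new Drupal paths would be a separate step.

```php
<?php
/**
 * Hypothetical helper: turn absolute links to the old domain(s) into
 * root-relative links so they keep working after the move.
 *
 * @param string $html      Body text to fix.
 * @param array  $old_hosts Hostnames of the old site (an assumption).
 */
function make_links_relative($html, array $old_hosts) {
  $hosts = implode('|', array_map(function ($h) {
    return preg_quote($h, '#');
  }, $old_hosts));
  // href="http(s)://(www.)oldhost/path" -> href="/path" (or "/" if no path).
  $pattern = '#\b(href|src)=(["\'])https?://(?:www\.)?(?:' . $hosts . ')(/[^"\']*)?\2#i';
  return preg_replace_callback($pattern, function ($m) {
    $path = (isset($m[3]) && $m[3] !== '') ? $m[3] : '/';
    return $m[1] . '=' . $m[2] . $path . $m[2];
  }, $html);
}
```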

On top of that, WP also has the facility of generating short URLs in the form http://wp.me/J345H3, and for some reason these had also been incorporated into the text as full links. They had to be translated into “real” links, and those pointing to the site itself had to be made local. This was simply done by loading the page from the wp.me link using file_get_contents() and looking at the canonical link in the head section of the result. Using PHP’s DOMDocument, that’s fairly simple. I inserted these links into a small database table and looked there before asking wp.me for a page. That saved a lot of external lookups: once a link had been resolved online, it could be read locally from then on.
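The lookup-with-cache approach could be sketched like this. It's an illustration, not the original code: the cache is a plain PHP array passed by reference rather than the database table described above, and the function names `find_canonical_url()` and `resolve_short_url()` are hypothetical. The fetcher is passed in as a callable, so `'file_get_contents'` can be used for real lookups (it follows the wp.me redirect by default), while a fake can stand in when testing.

```php
<?php
/**
 * Extract the canonical URL from an HTML page, or NULL if there is none.
 */
function find_canonical_url($html) {
  if ($html === '') {
    return NULL; // DOMDocument::loadHTML() rejects empty input.
  }
  $doc = new DOMDocument();
  // Real-world HTML is rarely valid; collect parser errors instead of warning.
  libxml_use_internal_errors(true);
  $doc->loadHTML($html);
  libxml_clear_errors();
  foreach ($doc->getElementsByTagName('link') as $link) {
    if (strtolower($link->getAttribute('rel')) === 'canonical') {
      return $link->getAttribute('href');
    }
  }
  return NULL;
}

/**
 * Hypothetical resolver: look in the cache first, fetch only on a miss.
 * $fetch is any callable returning page HTML (or FALSE on failure).
 */
function resolve_short_url($short_url, array &$cache, callable $fetch) {
  if (isset($cache[$short_url])) {
    return $cache[$short_url];
  }
  $html = $fetch($short_url);
  $target = ($html === FALSE) ? NULL : find_canonical_url($html);
  if ($target !== NULL) {
    $cache[$short_url] = $target; // Only cache successful lookups.
  }
  return $target;
}
```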

Last but not least, all the old links to images had to be fixed so that they pointed to the new location of the images within Drupal’s file system, and links to larger images had to have the class colorbox added so that they could pop up nicely.
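A sketch of the image fix with DOMDocument, assuming images were referenced by absolute URLs under the old `wp-content/uploads/` folder and now live under a Drupal files path; both prefixes are parameters, and `fix_image_markup()` is a hypothetical name. A link gets the `colorbox` class only when it wraps an image and points to an image file.

```php
<?php
/**
 * Hypothetical helper: point image URLs at their new location and add the
 * colorbox class to links that wrap an image and point to a larger image.
 */
function fix_image_markup($html, $old_prefix, $new_prefix) {
  $doc = new DOMDocument();
  libxml_use_internal_errors(true);
  // Wrap the fragment in a div and declare UTF-8 so non-ASCII text survives.
  $doc->loadHTML('<?xml encoding="UTF-8"><div>' . $html . '</div>');
  libxml_clear_errors();
  $wrapper = $doc->getElementsByTagName('div')->item(0);

  $rewrite = function ($url) use ($old_prefix, $new_prefix) {
    return (strpos($url, $old_prefix) === 0)
      ? $new_prefix . substr($url, strlen($old_prefix))
      : $url;
  };

  foreach ($doc->getElementsByTagName('img') as $img) {
    $img->setAttribute('src', $rewrite($img->getAttribute('src')));
  }
  foreach ($doc->getElementsByTagName('a') as $a) {
    if (!$a->hasAttribute('href')) {
      continue; // Named anchors have nothing to rewrite.
    }
    $href = $rewrite($a->getAttribute('href'));
    $a->setAttribute('href', $href);
    $wraps_image = $a->getElementsByTagName('img')->length > 0;
    if ($wraps_image && preg_match('/\.(jpe?g|png|gif)$/i', $href)) {
      $a->setAttribute('class', trim($a->getAttribute('class') . ' colorbox'));
    }
  }

  // Serialize only what is inside the wrapper div.
  $out = '';
  foreach ($wrapper->childNodes as $child) {
    $out .= $doc->saveHTML($child);
  }
  return $out;
}
```

The `<?xml encoding="UTF-8">` prefix is a common trick to keep `loadHTML()` from treating the fragment as ISO-8859-1.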

Minor issues

Add to that a lot of minor issues:

- removal of certain iframed content and old Adobe Flash objects – but keeping iframed YouTube videos.

- removal of a ton of old and/or affiliate links to eBay and Amazon – most not working anymore.

- cleaning out a lot of odd characters and replacing them with something renderable, preferably UTF-8.

- fixing a whole lot of linked YouTube videos, which were to be maintained, but properly embedded.

- fishing out captions for the images as well as bios for the authors – all entered as a part of the body text in the posts – and making them separate, controllable fields.

- removing various script snippets, which generated bits of dynamic content like support buttons, links to shops and more.

- fixing a wealth of line breaks in tables, lists and other structures to keep them from “exploding” into endless rows of br tags that add unwanted whitespace.

- adding a cover image taken from the article and saved as a managed file, attached to the article’s cover image field.

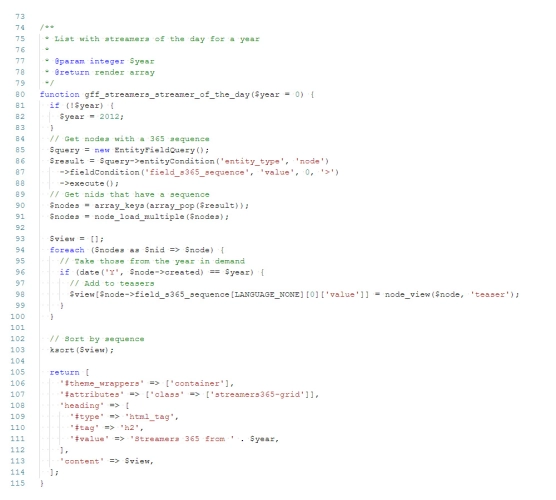

- adding a few functions that will be needed after the conversion, like showing the streamers of the day.

All these things were done using an unholy combination of preg_match() and DOMDocument. Some instances called for one, some for the other.
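To illustrate how the two tools can work together, here is a sketch of the first item in the list: DOMDocument finds the `iframe`, `object` and `embed` elements, and preg_match() decides whether an iframe is a YouTube embed worth keeping. The function name `strip_unwanted_embeds()` and the assumption that kept videos use `youtube.com/embed/` (or `youtube-nocookie.com/embed/`) URLs are illustrative, not taken from the original module.

```php
<?php
/**
 * Hypothetical helper: remove iframes, objects and embeds (e.g. old Flash
 * content) but keep iframes that embed YouTube videos.
 */
function strip_unwanted_embeds($html) {
  $doc = new DOMDocument();
  libxml_use_internal_errors(true);
  $doc->loadHTML('<?xml encoding="UTF-8"><div>' . $html . '</div>');
  libxml_clear_errors();
  $wrapper = $doc->getElementsByTagName('div')->item(0);

  $doomed = array();
  foreach (array('iframe', 'object', 'embed') as $tag) {
    foreach ($doc->getElementsByTagName($tag) as $node) {
      $src = $node->getAttribute('src');
      $is_youtube = $tag === 'iframe'
        && preg_match('#^(https?:)?//(www\.)?youtube(-nocookie)?\.com/embed/#i', $src);
      if (!$is_youtube) {
        $doomed[] = $node;
      }
    }
  }
  // Remove after collecting: DOMNodeLists are live, so removing nodes
  // while iterating over them would skip elements.
  foreach ($doomed as $node) {
    if ($node->parentNode) {
      $node->parentNode->removeChild($node);
    }
  }

  $out = '';
  foreach ($wrapper->childNodes as $child) {
    $out .= $doc->saveHTML($child);
  }
  return $out;
}
```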

Finally, the comments had to be converted and attached to the newly formed and saved nodes, and the authors had to be created as Drupal users, have their bios added as nodes and be connected with the new content.
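The comment part could look roughly like this. It's a sketch, assuming the standard `wp_comments` columns and a Drupal 7 comment object that would afterwards be handed to `comment_save()`; `wp_comment_to_drupal()`, the two ID maps, importing commenters as anonymous (`uid` 0) and the `filtered_html` text format are all assumptions made for illustration.

```php
<?php
/**
 * Hypothetical mapper from a wp_comments row to a Drupal 7 style comment.
 *
 * @param object $wp      A wp_comments row.
 * @param array  $nid_map WordPress post ID => new Drupal node ID.
 * @param array  $cid_map WordPress comment ID => new Drupal comment ID
 *                        (parents must be imported before replies).
 * @return object|null The comment object, or NULL if its post was skipped.
 */
function wp_comment_to_drupal($wp, array $nid_map, array $cid_map) {
  if (!isset($nid_map[$wp->comment_post_ID])) {
    return NULL;
  }
  $comment = new stdClass();
  $comment->nid = $nid_map[$wp->comment_post_ID];
  $comment->pid = isset($cid_map[$wp->comment_parent]) ? $cid_map[$wp->comment_parent] : 0;
  $comment->uid = 0; // Anonymous; the commenter's name is kept below.
  $comment->name = $wp->comment_author;
  $comment->mail = $wp->comment_author_email;
  $comment->homepage = $wp->comment_author_url;
  $comment->created = strtotime($wp->comment_date_gmt . ' UTC');
  $comment->changed = $comment->created;
  // WordPress uses '1' for approved comments; 1 is Drupal's COMMENT_PUBLISHED.
  $comment->status = ($wp->comment_approved === '1') ? 1 : 0;
  // Subject: first 64 characters of the plain text, on a single line.
  $plain = trim(preg_replace('/\s+/u', ' ', strip_tags($wp->comment_content)));
  preg_match('/^.{0,64}/u', $plain, $m);
  $comment->subject = $m[0];
  $comment->comment_body = array(
    'und' => array(array('value' => $wp->comment_content, 'format' => 'filtered_html')),
  );
  return $comment;
}
```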

A 1500 line module

All these things were confined to a single module, in which the main function import_streamers() read all the old posts and their data and created correctly sanitized, formatted and tagged nodes, where essentially all HTML was valid, links were working, images shown and embedded videos were OK.

The module wound up being a little more than 1500 lines, and a complete conversion could be done, 100 posts at a time, in less than 5 minutes on my local development server.

Still, in spite of all the effort put into taking care of every conceivable situation, there were a few manual tasks to be done afterwards, and quite a few nodes that required editing and adjustment after the import to be acceptable.

But, after a lot of coding, testing and preparation, the import script was ready to run on the live server.

Doing it live

I simply copied all the files there, created the streamers database on the live MySQL server, backed up the current database and fired up the conversion. It ran fast and smoothly, and now, about a day later, most of the little quirks have been fixed and a few creases have been ironed out by manually going through most articles.

There will still be some tasks left, but in essence all content has been merged, tagged and published and seems to work as intended.
