I'm surprised how little web publishers cherish their old URLs. When I follow old outbound links from our articles, I constantly run into 404 pages. I value GFF’s old web addresses, and here's how I maintain them.

Nerd alert

This is NOT about fly fishing or fly tying, but about site development and nerdy stuff. A site as old as the Global FlyFisher has been through several generations of publishing systems, and content has been placed in all kinds of locations within these systems. The original site was a simple directory structure, literally: it consisted of static HTML files stored in a folder on the server.

This technology (or lack of same) was transferred to the second generation of the site, which was in essence the first real incarnation of the Global FlyFisher. The URLs from the first server were impossible to retain since they were on a different domain, but from the first version of the site on globalflyfisher.com, I have tried to keep all old URLs intact.

Since the first system was hardly a system at all, the addresses could look something like this:

- globalflyfisher.com/fishbetter/bubble.htm
- globalflyfisher.com/tiebetter/thread_control/index.htm
- globalflyfisher.com/fishbetter/shootingheads/8th-12th.html
- globalflyfisher.com/patterns/banderillas/index.html

All these were static HTML files, and as you can see, some had specific file names while others were index.* files, which didn't need to appear in the URL because the server was set up to show whatever index file it found in a folder. Some were *.htm, some were *.html.

After we changed to a more automated system, programmed in PHP, the URLs could look like this:

- globalflyfisher.com/fishbetter/i-remember-that-fish/salt-pike.php
- globalflyfisher.com/fishbetter/midnight-sea-trout/index.php
- globalflyfisher.com/reviews/books/bookbase/reading.php

Some of these *.php files were just articles, drawing their content from the database, while others hosted functions that built and calculated content in different ways, like the last one, which listed the books being read for review.

To make things worse, I also had some general-purpose PHP scripts that took arguments, like the ones for the blog or the book reviews. They would look something like this:

- globalflyfisher.com/reviews/books/bookbase/show_single.php?id=123
- globalflyfisher.com/blog/?id=123
- globalflyfisher.com/blog/?day=2003-08-28

So as I moved the site to Drupal, all these URLs were lost. Drupal offers nice, clean URLs out of the box, so from now on there will only be addresses that look like folders, with no file name, no "loose" files addressed directly, and generally no parameters passed through URLs using ? and &. This is not entirely true, but it holds at least for all general content like articles and blog posts.

But what about all these old, odd URLs? I would hate for people to get a "page not found" for all these old pages, almost all of which still exist somewhere, just handled by Drupal.

Well, I have handled situations like this before, for clients who migrated from other systems to Drupal, and I already had a general plan and some useful code. I call my solution an .

The idea is to handle 404s using a three-step process:

- First, look for the content based on the full failed old URL. On a 100% match, send people to the matching new page.

- Then look for possible candidates by examining the parts of the URL. If there's only one, send the user there; otherwise compile a list.

- Lastly, present the user with a 404 page, but let it contain the above list and some constructive help, like a link to a search page and a sitemap.
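The three steps above can be sketched as a small dispatcher. This is a minimal Python illustration, not the gff_urls module itself (which is PHP inside Drupal); the `Outcome` type and the two lookup callables are hypothetical stand-ins for the exact-match and partial-match searches.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Outcome:
    """Result of handling one failed request (hypothetical structure)."""
    kind: str                        # "redirect" or "not_found"
    target: Optional[str] = None     # new path when kind == "redirect"
    suggestions: list[str] = field(default_factory=list)


def handle_404(
    failed_path: str,
    exact_lookup: Callable[[str], Optional[str]],
    find_candidates: Callable[[str], list[str]],
) -> Outcome:
    # Step 1: a 100% match on the full old URL wins outright.
    target = exact_lookup(failed_path)
    if target is not None:
        return Outcome("redirect", target)
    # Step 2: look at the parts of the URL; a single candidate is good enough.
    candidates = find_candidates(failed_path)
    if len(candidates) == 1:
        return Outcome("redirect", candidates[0])
    # Step 3: fall back to the 404 page, carrying whatever list was compiled.
    return Outcome("not_found", suggestions=candidates)
```

Passing the lookups in as functions keeps the control flow separate from where the data lives (a field table, an alias table, or a plain dict in a test).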

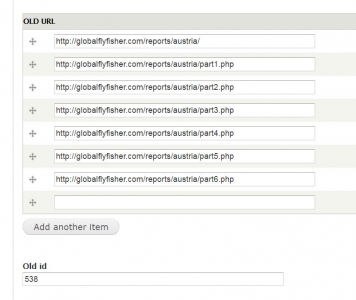

The first option requires that all old URLs and old IDs are saved somewhere. And of course they are. All nodes and pages on the Global FlyFisher have a custom field called Old URL, which can hold one or several values. These values simply contain the full URLs that used to point to what is now in the node. They were put there during the conversion and import of the old content, and I can update them manually as I find more missing links.

The system can then look for the failed URL in this field, find the proper node ID and simply send people on to that page.
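A sketch of the exact lookup, assuming the multi-valued Old URL field can be read as a mapping from node ID to a list of stored URLs. Because the stored values are full URLs (sometimes without a scheme) while a failed request arrives as a path, both sides are reduced to "path?query" first. The function names and the `/node/<nid>` return value are illustrative assumptions.

```python
from typing import Optional
from urllib.parse import urlsplit


def url_key(url: str) -> str:
    """Reduce a stored full URL or a request path to "path?query"."""
    if url.startswith("/") or "://" in url:
        parts = urlsplit(url)
    else:
        # Bare "globalflyfisher.com/..." values: a leading "//" makes urlsplit
        # treat the first segment as the host rather than as part of the path.
        parts = urlsplit("//" + url)
    key = parts.path or "/"
    return f"{key}?{parts.query}" if parts.query else key


def build_old_url_index(old_url_field: dict[int, list[str]]) -> dict[str, int]:
    """Invert the multi-valued Old URL field into old URL -> node ID."""
    index: dict[str, int] = {}
    for nid, urls in old_url_field.items():
        for url in urls:
            index.setdefault(url_key(url), nid)  # first node wins on duplicates
    return index


def find_by_old_url(index: dict[str, int], failed: str) -> Optional[str]:
    """Return the Drupal system path for a 100% match, or None."""
    nid = index.get(url_key(failed))
    return None if nid is None else f"/node/{nid}"
```

Building the inverted index once makes each 404 a single dictionary lookup instead of a scan over every node.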

The same goes for the IDs. All the old IDs are saved in a custom field called Old ID, and by looking up an old ID there, it's possible to find the current node that used to have this ID. If the failed URL matches the pattern of an old review, blog post, keyword or whatnot with an ID argument, the system tries to locate the old ID in a suitable content type (blog, review, image), and on a positive find, it simply sends the user to the right node.
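The ID lookup boils down to "recognize the old script, then search the right content type". Here is a hypothetical sketch using two of the URL shapes listed earlier. The pattern table and the `(content_type, old_id) -> nid` mapping are assumptions standing in for the Old ID field. Keying on the content type matters because blog 123 and review 123 were different items.

```python
import re
from typing import Optional

# Hypothetical pattern table: which old script took an id argument, and
# which Drupal content type now holds those items.
OLD_ID_PATTERNS = [
    (re.compile(r"^/reviews/books/bookbase/show_single\.php\?(?:.*&)?id=(\d+)"), "review"),
    (re.compile(r"^/blog/(?:index\.php)?\?(?:.*&)?id=(\d+)"), "blog"),
]


def find_by_old_id(failed: str, old_ids: dict[tuple[str, int], int]) -> Optional[str]:
    """Map an old id-style URL to the node that now holds that item."""
    for pattern, content_type in OLD_ID_PATTERNS:
        m = pattern.match(failed)
        if m:
            nid = old_ids.get((content_type, int(m.group(1))))
            if nid is not None:
                return f"/node/{nid}"
    return None
```

A date-style URL such as /blog/?day=2003-08-28 carries no ID, so it falls through to the later steps.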

If nothing is found, the system looks in the Old URL field and tries to locate a partial match there. Again, a close hit sends the user on, while a failure starts some gymnastics: stripping off index.htm|html|php etc. and repeating the search.
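One way to picture the "gymnastics" is as a list of successively looser forms of the failed path, each tried in turn. In this hypothetical sketch, a "close hit" means exactly one node whose stored old URL starts with that form. The article does not define a close hit this precisely, and the set of index extensions is assumed.

```python
import re
from typing import Optional

# A trailing index.htm, index.html or index.php right after a slash.
INDEX_FILE = re.compile(r"(?<=/)index\.(?:htm|html|php)$")


def loosen(path: str) -> list[str]:
    """Return the path followed by successively looser variants of it."""
    forms = [path]
    no_index = INDEX_FILE.sub("", path)  # "/a/b/index.php" -> "/a/b/"
    no_slash = no_index.rstrip("/")      # "/a/b/" -> "/a/b"
    for form in (no_index, no_slash):
        if form and form not in forms:
            forms.append(form)
    return forms


def partial_match(failed: str, old_url_index: dict[str, int]) -> Optional[int]:
    """Find the single node whose stored old URL starts with a loosened form."""
    for form in loosen(failed):
        nids = {nid for old, nid in old_url_index.items() if old.startswith(form)}
        if len(nids) == 1:  # unambiguous: treat as a close hit
            return nids.pop()
    return None
```

Plain prefix matching is deliberately crude. For example, /a/b also prefixes /a/bc, which is why an ambiguous result falls through to the next step instead of redirecting.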

This whole routine is implemented in a pretty crude but efficient way, namely as a block that's only shown on the 404 page. I have set up a custom 404 page per step 3 above, and the block, made by a custom module, only shows on this page. The module is called gff_urls, and in the function gff_urls_handle() the system simply looks at the failed URL and tries to determine what it is, following the process described above.

If any of these searches results in a positive hit, the block sends a 301 (Moved Permanently) header pointing to the new page, and the browser is redirected there, where the page loads normally with a 200 (OK). This cancels the 404, tells the browser (or search engine robot) that the old page has moved for good, and shows the proper page.
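At the HTTP level, the redirect is a 301 status plus a Location header. The browser then requests the new address and gets a normal 200 from it. The article does this in PHP inside a Drupal block. As a language-neutral illustration, here is a hypothetical Python WSGI app (PEP 3333) where `resolve` stands in for the lookups described above.

```python
from http import HTTPStatus
from typing import Callable, Iterable, Optional


def make_404_app(resolve: Callable[[str], Optional[str]]):
    """WSGI app: redirect resolvable old paths, otherwise serve a 404 page."""

    def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "/")
        query = environ.get("QUERY_STRING", "")
        old = f"{path}?{query}" if query else path
        target = resolve(old)
        if target is not None:
            status = HTTPStatus.MOVED_PERMANENTLY  # 301: moved for good
            start_response(f"{status.value} {status.phrase}", [("Location", target)])
            return [b""]
        status = HTTPStatus.NOT_FOUND
        start_response(f"{status.value} {status.phrase}",
                       [("Content-Type", "text/html; charset=utf-8")])
        return [b"<h1>Page not found</h1>"]

    return app
```

A 301 rather than a 302 is what tells search engines to transfer the old address to the new one instead of treating the move as temporary.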

No 404, no errors, no disappointment, no agony. Updated search engines. Happy users! Yay!

If no certain match is found and we get to this point, it's time to start poking around for other solutions. I split the URL at the slashes, so that /patterns/double-k-reverse-spider/index.php becomes patterns, double-k-reverse-spider, and /patterns/muddler/deerhair.html becomes patterns, muddler, deerhair.
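The splitting step, including dropping index.* files and file extensions, fits in a few lines. This is a hypothetical Python helper that reproduces the two examples above. It also ignores any query string, which is an assumption on my part.

```python
from posixpath import splitext


def url_keys(path: str) -> list[str]:
    """Split an old path at the slashes, drop index.* files, strip extensions."""
    keys = []
    for part in path.split("?", 1)[0].split("/"):
        if not part:
            continue  # leading, trailing or doubled slashes
        stem, _ext = splitext(part)
        if stem == "index":
            continue  # index.* adds nothing; the folder name already says it
        keys.append(stem)
    return keys
```

For example, url_keys("/patterns/muddler/deerhair.html") gives ["patterns", "muddler", "deerhair"].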

I ignore index.* files and strip off the extensions of all other files. This gives me some keys to search for. So it's off to Drupal's URL alias table to find the best match. Comparing the parts of the missing URL with the parts of the alias column in this table can produce a list of Drupal paths similar to what the user is looking for. The Drupal source path behind each alias tells me what I'm looking at, taxonomy/term/ttt or node/nnn, and I can load the term or node and present the user with a meaningful link.
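A hypothetical ranking of alias rows against those keys is sketched below. It assumes the rows come out of the alias table as (source, alias) pairs, the column names used by Drupal 7's url_alias table. The score is simply the number of shared path segments. The real module may weigh matches differently.

```python
import re

# Drupal system paths this sketch knows how to turn into links.
SOURCE = re.compile(r"^(node|taxonomy/term)/(\d+)$")


def alias_candidates(keys: list[str], aliases: list[tuple[str, str]],
                     limit: int = 10) -> list[tuple[int, str, int, str]]:
    """Rank (source, alias) rows by how many old-URL keys the alias shares.

    Returns (score, kind, id, alias) tuples, best first; kind is "node" or "term".
    """
    wanted = set(keys)
    hits = []
    for source, alias in aliases:
        score = len(wanted & set(alias.split("/")))
        m = SOURCE.match(source)
        if score and m:
            kind = "node" if m.group(1) == "node" else "term"
            hits.append((score, kind, int(m.group(2)), alias))
    hits.sort(key=lambda hit: hit[0], reverse=True)  # stable: ties keep table order
    return hits[:limit]
```

The kind and ID are what you need to load the node or term and turn each hit into a titled link on the 404 page.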

These links are compiled into a list, which is presented together with a meaningful text and some other helpful links.

So altogether this process leads to several good things:

- Fewer 404s, which typically bother users and hurt you in the search engines.

- Maintenance of old URLs, meaning that inbound links to old pages will still work. Again, users will be happy and your good reputation will be maintained.

- Proper handling of old URLs for pages that still exist. By issuing a 301 header, you tell the world that a page has moved permanently, and the world (read: search engines) can act properly.

On a site like GFF, with content reaching back to 1994 and a ton of links from outside pages, this is quite important. Google says that more than a quarter of a million pages link to GFF (257,000 to be precise), and that is pure gold when it comes to incoming visitors and search engine ranking. I dearly want to keep these links working and make sure that people clicking one of these many links to the site aren't disappointed by a crummy 404 page, but get the page they are looking for, or at least some help finding something useful.
